Steve Mann, John C. Havens, Geoffrey Cowan, Allissa Richardson, and Robert Ouellette

Quote: "Clothing is a building built for a single occupant, an architecture-of-one, and thus cyborg technology is urban design" -- Steve Mann, MIT Architecture, 1992.

In a world of Big Data centralization, we also need distributed “Little Data” like blockchain. “Wearables”, blockchain, and sousveillance are necessary to prevent fake news and stop corrupt surveillance.

The fundamental question humanity faces is one of having the right AND obligation to understand and document how machine intelligence is changing us. That process is not solely driven by police surveillance, but also includes a plethora of tracking activities across the social graph.

We must try to effect positive change, given the tsunami of powerful technologies that can disrupt our lives, for good and for evil. The answers, if they exist at all, are answers to wicked problems whose boundary conditions are not well understood.

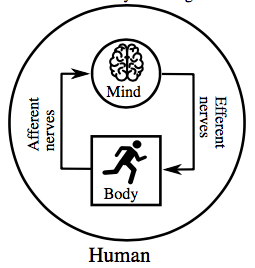

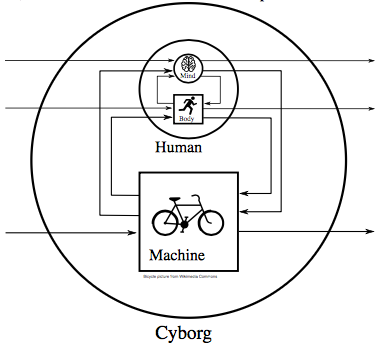

We begin with an attempt to humanise the discussion, starting from the mind/body duality as a foundation for a cybernetic connection to the world’s ever more complex systems.

We then relate this key issue to the way we humans innately adapt systems to our own use, and expand and generalize the analogy.

Humanistic Intelligence

Our mind and body work together as a system. Our body responds to our mind, and our mind responds to our body:

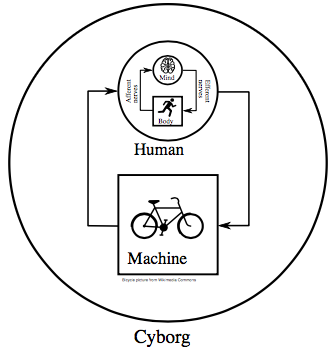

Some technologies, like the bicycle, also work this way. The technology responds to us, and we respond to the technology. After we’ve been riding a bicycle for a while, we forget that it is something separate from our body, and we begin to internalize it as part of us. Scientist, inventor, and musician Manfred Clynes coined the term “cyborg” for this special kind of relationship between a human and a machine.

There is a symmetry in both the completeness and the immediacy with which the bicycle responds to us and with which we respond to the bicycle. It senses us in a very direct way, as we depress the pedals or squeeze the brake handles. We also sense it in a very direct and immediate way: we can feel the raw, unprocessed, and undelayed manner in which it responds to us, as well as the manner in which it responds to its environment. In the future, this concept will expand to include humans and bicycles with various kinds of sensors and actuators.

Other technologies, like the automobile, respond to us to an even greater degree. A very light touch on the accelerator, and the automobile responds greatly to that small input. Today, cars are starting to be equipped with cameras and other sensors that recognize us and our minute gestures. Soon cars will even be able to tell when we’re feeling a little tired, or if we’ve had too much to drink. Some cars keep track of where we go, and even give the police and insurance companies a recording of our speed, acceleration, and driving history. In this sense, cars are becoming “surveillant systems”, i.e., systems that sense us and possibly report us to other authorities.

When our bicycle breaks down, we can understand it and fix it, but modern cars are a mystery to the average driver. The car knows a lot about us, but often reveals very little about itself.

Not everyone can, or even wants to, understand everything about how the world works, but it is in society’s best interest if at least some members of society, beyond the companies that make things, can understand how they work. When the manufacturer of a technology is the only entity that can understand it, there is an inherent conflict of interest and an information asymmetry.

When machines reveal their internal state, they can be trusted more. Much of the world we live in is being interconnected with technologies that are surveillant and centralized in nature, but this information flow is often one-sided. The information asymmetry is like a body that has only (or primarily) a working afferent nervous system, but a broken efferent nervous system.

Big Watching and Little Watching

Information asymmetry is a hallmark of a surveillant society, in which information is collected but not revealed to the people it is collected from. Moreover, some entities go as far as preventing individuals from collecting information.

In today’s society, we are all potential news reporters. We all have a right (and sometimes even a moral or ethical obligation) to tell our story. Whether a friend or a courtroom asks “where were you last night?”, we have a right, if we so wish, to show where we were or were not. We might wish to prove we were not at the scene of a crime, or simply to prove we have done nothing wrong.

When those with the authority of surveillance try to prevent sousveillance, we have a potential conflict-of-interest, and thus it is in the public interest to have multiple independent news sources that tell us what happened.

When a North Carolina officer threatened a motorist for recording himself (and the officer), social pressures rightly upheld the rights of the individual to tell his story [North Carolina officer demoted after telling Uber driver/lawyer he couldn’t record police, abc7NY WABC-TV, Monday, April 03, 2017].

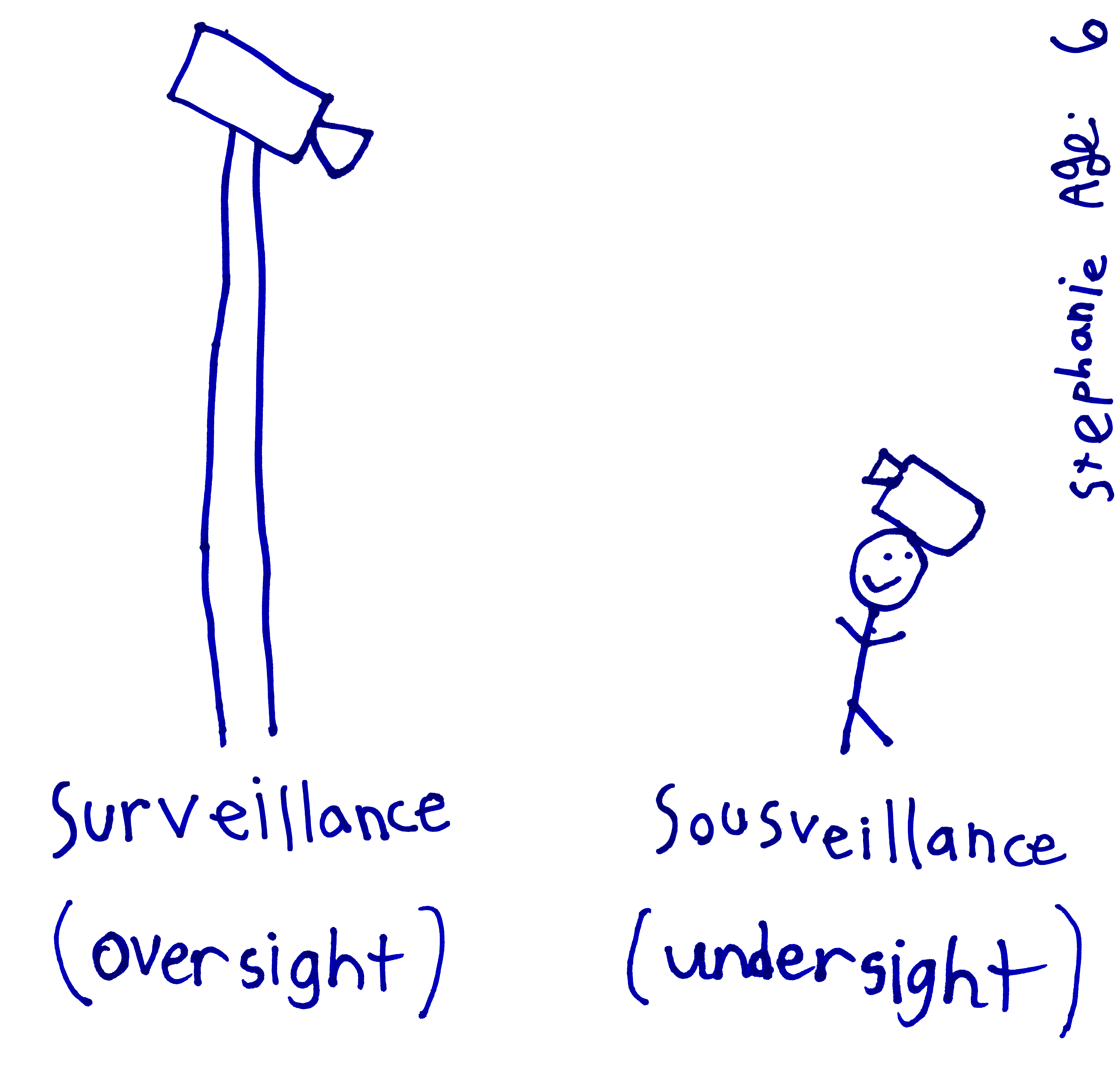

There is an inherent hypocrisy in “Big Data” and surveillance (“Big Watching”) that tries to suppress “little data” and sousveillance (“little watching”).

And the opposite of hypocrisy is integrity. Thus big data and surveillance often embody or sustain a lack of integrity. This lack of integrity can be mitigated through the introduction of distributed (little data) technologies like blockchain and sousveillance.

One possible solution is to automate the image capture, so that a perpetrator triggers the capture and thereby documents himself or herself:

“[T]he personal safety device includes the use of biosensors where the quotient of heart rate divided by footstep rate… provides a visual saliency index. Suppose that someone were to draw a gun and demand cash from the wearer. The likely scenario is that the wearer’s heart rate would increase without an increase in footstep rate to explain it (in fact footstep rate would no doubt fall to zero at the request of the assailant). Such an occurrence would be programmed to trigger a “maybe I’m in distress” message to other members of the personal safety network…”

“Smart Clothing: The Wearable Computer and WearCam”, Steve Mann, Personal Technologies, Springer, March 1997, Volume 1, Issue 1.
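
The trigger described in the quotation can be sketched in a few lines of Python. This is an illustrative sketch, not the 1997 WearCam implementation: the `Sample` type, the floor on footstep rate, and both thresholds (`index_ratio`, `min_hr_rise_bpm`) are assumptions chosen for the example. Note that the raw quotient also jumps when the wearer simply stops walking, so the sketch additionally requires an actual rise in heart rate before flagging distress.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    heart_rate_bpm: float     # beats per minute
    footstep_rate_spm: float  # footsteps per minute


def saliency_index(s: Sample, floor_spm: float = 1.0) -> float:
    """Heart rate divided by footstep rate.

    The footstep rate is floored at `floor_spm` so that standing still
    (0 steps/min) yields a large but finite index instead of dividing by zero.
    """
    return s.heart_rate_bpm / max(s.footstep_rate_spm, floor_spm)


def maybe_in_distress(previous: Sample, current: Sample,
                      index_ratio: float = 2.0,
                      min_hr_rise_bpm: float = 15.0) -> bool:
    """Hypothetical trigger rule; both thresholds are illustrative assumptions.

    Flags distress when the index jumps relative to the previous window AND the
    heart rate itself rose, i.e. the heart speeds up with no footsteps to explain it.
    Requiring a real heart-rate rise avoids flagging a calm stop, where the index
    also jumps only because footsteps fall to zero.
    """
    index_jumped = saliency_index(current) > index_ratio * saliency_index(previous)
    heart_rose = current.heart_rate_bpm - previous.heart_rate_bpm >= min_hr_rise_bpm
    return index_jumped and heart_rose


# Walking normally, then stopped at gunpoint: heart rate up, footsteps to zero.
print(maybe_in_distress(Sample(75, 90), Sample(120, 0)))     # True
# Walking, then stopping calmly: the index jumps but the heart rate falls.
print(maybe_in_distress(Sample(80, 100), Sample(72, 0)))     # False
# Breaking into a jog: heart rate up, but the footsteps explain it.
print(maybe_in_distress(Sample(80, 100), Sample(140, 160)))  # False
```

A real device would compute these rates over sliding windows of sensor data and send the "maybe I’m in distress" message to the personal safety network when the rule fires; that plumbing is omitted here.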

“Big Watching” (surveillance) is when we’re being watched. “Little Watching” (sousveillance) is when we do the watching.

With the rise of AI (Artificial Intelligence), more and more of the watching is done by machines rather than by people. Thus the question will no longer merely be whether we have the right to record the police, but, increasingly, whether we have the right (and responsibility) to record (and understand) machines and machine intelligence.

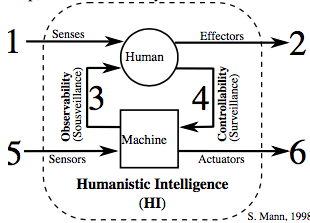

This question is at the heart of what Marvin Minsky (the “Father of AI”), Ray Kurzweil, and Steve Mann refer to as “Humanistic Intelligence” [Minsky, Kurzweil, and Mann, “Society of Intelligent Veillance”, IEEE ISTAS 2013, pp. 13–17].

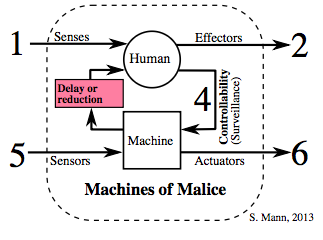

Humanistic Intelligence is machine learning done right, i.e., where the machine senses the human (surveillance) and the human senses the machine (sousveillance), resulting in a complete feedback loop, much like the way the human mind and body work together, or the way a person and a bicycle work together.

Additionally, the human and machine both sense and affect their surroundings, so there are actually a total of six signal flow paths. But the most important ones for our discussion are those that form the feedback loop between human and machine.
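
The count of six follows from three nodes (human, machine, environment), each of which can send signals to either of the other two: 3 × 2 = 6 directed paths. The Python sketch below assumes that a "signal flow path" is simply an ordered (source, destination) pair; it enumerates the paths and checks whether the human-machine loop is closed. `is_humanistic` is a hypothetical helper name, not an established API.

```python
from itertools import permutations

NODES = ("human", "machine", "environment")

# Each ordered (source, destination) pair is one directed signal flow path;
# e.g. ("human", "machine") means the machine senses the human.
ALL_PATHS = set(permutations(NODES, 2))

SURVEILLANCE = ("human", "machine")   # the machine senses the human
SOUSVEILLANCE = ("machine", "human")  # the human senses the machine


def is_humanistic(active_paths: set) -> bool:
    """True only if both directions between human and machine are active,
    i.e. the human-machine feedback loop is closed."""
    return SURVEILLANCE in active_paths and SOUSVEILLANCE in active_paths


print(len(ALL_PATHS))                 # 6
print(is_humanistic(ALL_PATHS))       # True
print(is_humanistic({SURVEILLANCE}))  # False: one-sided, surveillance only
```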

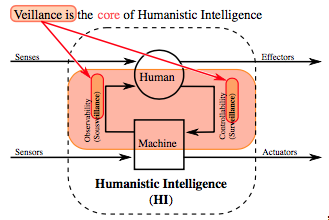

Thus veillance is at the core of HI.

And feedback delayed is feedback denied: a delay or reduction in veillance results in machines that sometimes fail to serve humanity:

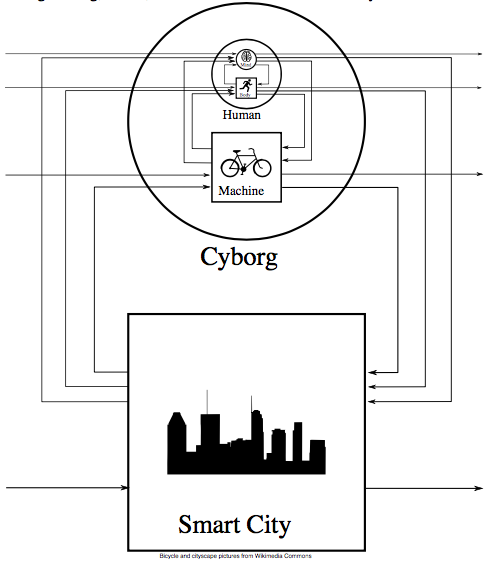
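
The idea that delayed feedback is denied feedback has a well-known parallel in control theory: a loop that corrects itself using stale measurements tends to overshoot, and with enough delay it oscillates with growing amplitude instead of settling. The Python toy model below is only an illustration under assumed values (a correction gain of 0.5 and a three-step delay, both arbitrary), not a model of any particular building or city; `simulate` is a hypothetical helper.

```python
def simulate(gain: float, delay: int, steps: int = 300) -> list[float]:
    """Toy discrete-time loop: each step, reduce the error x by `gain`
    times the error as it was observed `delay` steps ago.

    With delay=0 the correction uses fresh feedback; with delay > 0 the
    correction is based on stale feedback.
    """
    history = [1.0] * (delay + 1)  # start from a constant error of 1.0
    for _ in range(steps):
        observed = history[-1 - delay]  # feedback that is `delay` steps old
        history.append(history[-1] - gain * observed)
    return history[delay:]  # drop the padded pre-start values


fresh = simulate(gain=0.5, delay=0)
stale = simulate(gain=0.5, delay=3)
print(abs(fresh[-1]) < 1e-6)                   # True: the error halves every step
print(max(abs(x) for x in stale[-20:]) > 10)   # True: the error overshoots and grows
```

The same gain that settles quickly with fresh feedback drives the loop into growing oscillation once the feedback arrives three steps late.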

Thus, if smart buildings, smart cities, etc., are to serve humanity, we must balance surveillance and oversight with sousveillance and undersight. We must design smart cities, sensing, newsgathering, media, and social media as sousveillant systems in which we can sense the city that senses us, at all levels.

We see here a kind of self-similar (fractal) architecture, from the cells and neurons in our body, to the microchips in our wearable computers, to the buildings, streets, and cities, the world, and the universe:

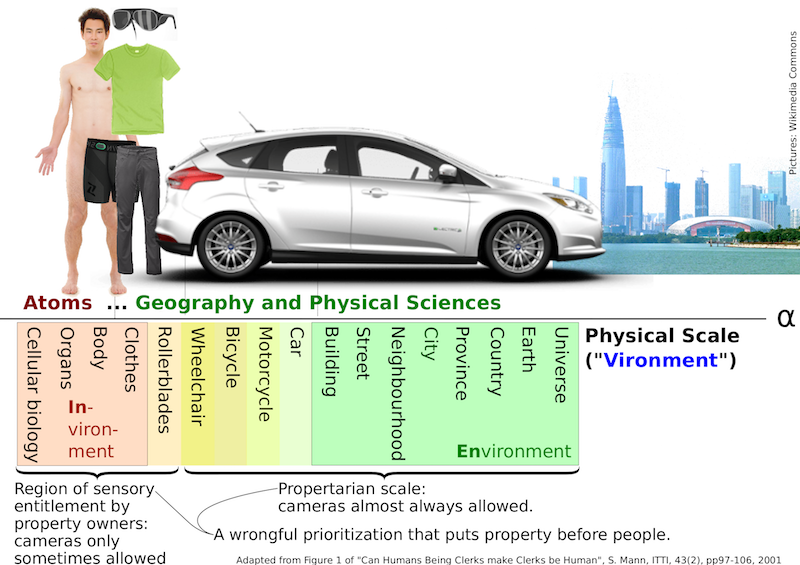

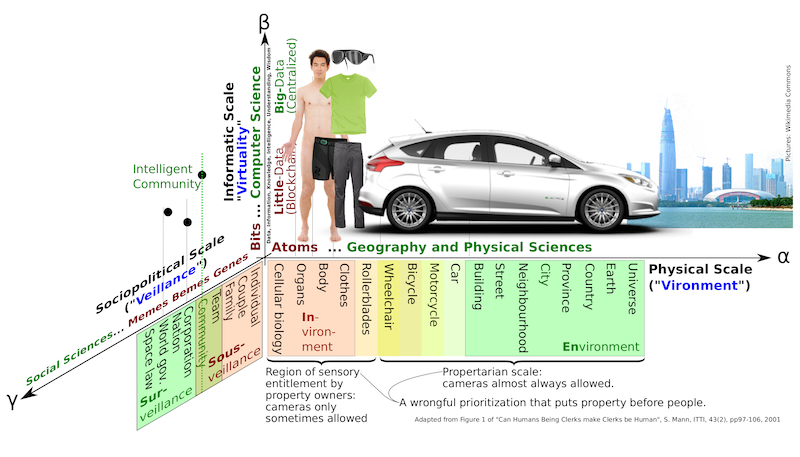

Along with this physical scale (from atoms to an individual human body, all the way out to the edge of the universe), we also have an informatic scale (from “Bits” to “Big Data”), a human/social scale (from “Genes” to “Bemes” to “Memes”…), and a veillance scale (from sousveillance, or little watching, to surveillance, or big watching).

We can think of this in a 3-dimensional space: blockchain (“little data”), wearables, and sousveillance:

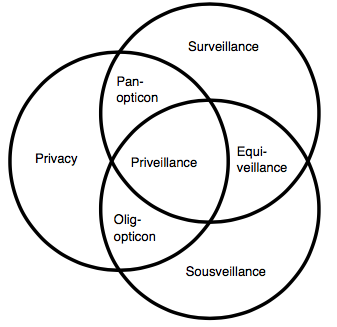

Privacy is often touted as a balance to surveillance, but when we combine privacy with surveillance, we get a world in which our neighbours don’t see us, but the police and authorities do.

Privacy with surveillance is like the Panopticon prison, in which prisoners’ privacy is protected by isolating them from one another, while all remain visible to the guards. This is not true privacy.

Equiveillance is the equilibrium between surveillance and sousveillance, thus forming a foundation for priveillance (the central equilibrium point in the diagram).

NEWStamp: Blockchain-based ENGwear (Electronic NewsGathering wear)

Joi Ito credits Wearable Wireless Webcam as the world’s first example of mobile weblogging. This was an early form of electronic newsgathering that was distributed, wearable, and web-based. The idea was that individual people in their day-to-day life could report news as it happens, serendipitously:

(WearCam: world’s first wearable web-based newsgathering)

The idea here is to fight fake or biased news by transmitting raw, unfiltered news as it happens, in real time, so there is no time or opportunity to “spin”, bias, or falsify the news. News reports are cryptographically timestamped and widely distributed.
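
A hash chain is one simple way to make a stream of reports tamper-evident: each record commits to the digest of its content and to the hash of the record before it, so changing any earlier item breaks every later link. The Python sketch below is a minimal illustration using only the standard library; the record layout and the names `make_entry` and `verify` are hypothetical, and this is not the actual WearCam or NEWStamp format. On its own, a forger could regenerate the whole chain, so in practice the latest hash would also have to be published widely (for example, anchored in a public blockchain).

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def make_entry(prev_hash: str, content: bytes, timestamp: float) -> dict:
    """Create a record that commits to its content, its time, and the previous record."""
    body = {
        "prev": prev_hash,
        "time": timestamp,
        "digest": hashlib.sha256(content).hexdigest(),
    }
    # Hash a canonical (key-sorted) serialization of the record itself.
    body["hash"] = hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()
    return body


def verify(chain: list[dict], contents: list[bytes]) -> bool:
    """Recompute every record; any edited, reordered, or missing item fails."""
    prev = GENESIS
    for entry, content in zip(chain, contents):
        if make_entry(prev, content, entry["time"]) != entry:
            return False
        prev = entry["hash"]
    return len(chain) == len(contents)


frames = [b"frame 1: street scene", b"frame 2: arrest in progress"]
chain, prev = [], GENESIS
for frame in frames:
    entry = make_entry(prev, frame, time.time())  # timestamp as it happens
    chain.append(entry)
    prev = entry["hash"]

print(verify(chain, frames))                                   # True
print(verify(chain, [frames[0], b"frame 2: edited footage"]))  # False
```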

More recently, we have proposed NEWStamp, a forward AND reverse timestamp designed specifically for newsgathering, which uses blockchain to fight fake news. NEWStamp works by projecting news items onto the surrounding environment and recapturing them using ENGwear (wearable newsgathering technologies):

IEEE i-Society, 2013

Fighting fake news with Blockchain + Wearables + Sousveillance

NEWStamp™ system for fighting fake news. News, such as the New York Times front page, is projected onto the real world by way of a wearable data projector, and then forms part of the captured live video of other newsworthy activity from day-to-day life. The NEWStamp prototype ENGwear system consists of a wearable newsgathering camera combined with a wearable newsputting projector.
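
One way to read "forward AND reverse timestamp" is as a pair of bounds on when a capture was made. This reading is an assumption, sketched here in Python: the projected front page acts as a reverse timestamp (the video cannot predate the news it shows, much like holding up a dated newspaper in a photograph), while committing the capture's hash to a blockchain acts as a forward timestamp (the video must already have existed when the hash was committed). The `CaptureEvidence` type, the `capture_window` function, and the example times are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class CaptureEvidence:
    # Reverse timestamp (assumed reading): the capture shows news first published
    # at this time, so it cannot have been recorded any earlier.
    news_published_at: datetime
    # Forward timestamp: a hash of the capture was committed to a public ledger
    # at this time, so the capture must already have existed by then.
    hash_committed_at: datetime


def capture_window(ev: CaptureEvidence) -> tuple[datetime, datetime]:
    """Return the (earliest, latest) possible capture time."""
    if ev.hash_committed_at < ev.news_published_at:
        # A capture cannot be committed before the news it shows existed.
        raise ValueError("inconsistent evidence: hash committed before the news was published")
    return ev.news_published_at, ev.hash_committed_at


# Hypothetical example: front page published at 06:00, hash committed at 09:30.
ev = CaptureEvidence(datetime(2020, 1, 15, 6, 0), datetime(2020, 1, 15, 9, 30))
earliest, latest = capture_window(ev)
print(latest - earliest)  # 3:30:00
```

The narrower the gap between the two bounds, the less opportunity there was to stage or alter the footage before it was committed.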

Conclusion + summary

Although “Big Data”, Surveillance, and Environmentalism (“Big Space”) are important, they must be balanced with “Little Data” (e.g. blockchain), Sousveillance (i.e. “Little Watching”) and Invironmentalism (“Little Space”, e.g. “wearables”).

The combination of wearables, blockchain, and sousveillance is essential to combatting fake news. In the future, we hope that none of us are prevented from telling the truth, and from collecting the data that we need in order to tell our story, using the technology of the day.

Going further:

There is much work to be done. For example, how would we build a service out of the process of time-stamping and inverse time-stamping to address fake news? Additionally, when it is so difficult to get real news, fake news can flourish in this partial vacuum. As the news industry dies, there is a last gasp of panic as so many of the news sources disappear behind paywalls or registration. When real news is no longer freely accessible (or becomes less accessible), fake news has more room to prosper.

We’ve reached a time when a web search returns results that look promising but fail to show what we searched for. What we need is a search engine that pre-checks to make sure the thing we’re looking for is actually in the link. Imagine a search engine that takes you right to the place in a document that contains the term you searched for.
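
The pre-checking idea can be sketched as a filter over results that have already been fetched: drop any page whose text does not actually contain the query, and link straight to the match. This Python sketch assumes the page text is already available (no crawling, ranking, or network access is shown), and `precheck` and the example URLs are hypothetical. For the "takes you right to the place" part it uses URL text fragments (`#:~:text=`), a browser feature that scrolls to and highlights the matching text in browsers that support it; the case-insensitive substring match is a simplification of how real search engines match terms.

```python
from urllib.parse import quote


def precheck(pages: dict[str, str], term: str, context: int = 30) -> list[tuple[str, int, str]]:
    """Keep only pages whose text really contains `term` (case-insensitive).

    Returns (deep_link, offset, snippet) for the first occurrence on each page.
    """
    hits = []
    for url, text in pages.items():
        offset = text.lower().find(term.lower())
        if offset == -1:
            continue  # the result looked promising, but the term is not there
        snippet = text[max(0, offset - context): offset + len(term) + context]
        # quote() never escapes '-', but '-' is a delimiter in text-fragment
        # syntax, so percent-encode it explicitly.
        fragment = quote(term, safe="").replace("-", "%2D")
        hits.append((f"{url}#:~:text={fragment}", offset, snippet))
    return hits


pages = {
    "https://example.org/a": "Police body camera footage was released today.",
    "https://example.org/b": "Subscribe now to keep reading this story.",
}
hits = precheck(pages, "body camera")
print(len(hits))   # 1: only the first page contains the term
print(hits[0][0])  # https://example.org/a#:~:text=body%20camera
```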

Suicurity

Sousveillance as a social contract requires a counterpart to security. Suicurity (self-care) is to security as sousveillance is to surveillance.

What the world needs is not privacy, but rather priveillance. We’re entering a world where every streetlight has at least one camera hidden inside it, to track traffic flow and lead us toward zero traffic fatalities. It is as futile to try to stop this surveillance as it is to try to stop a blind person from wearing a camera to see properly. So instead of trying to restrict surveillance, what we need to do is turn it into veillance. Taking the politics out of smart cities simply means making the data available to everyone rather than to a select few. Where Google’s smart city (“Gooveillance”) in Toronto failed, a more priveillant smart city will prosper, starting with a pilot to install a few hundred smart city streetlight camera nodes in the downtown core, under principles of priveillance rather than “Gooveillance”.

The other important idea is that we should regard surveillance as creating a spoliation of evidence whenever sousveillance is prohibited or not provided. Thus, if data is to have the potential to be used against us, it ought to be a requirement that the data be open (i.e., that it be priveillant). We need an app like “Vericop” to create MAI (Mutually Assured Identification), so that officials who request our identification (“show your papers!”) are themselves identified.

Optimum Insanity

Insanity, it is often said, is “doing the same thing over and over again and expecting a different result”. Yet that’s basically what we’re being told to do! When the computer at the office doesn’t work, we’re told to “run it again” (i.e., to do the same thing over and over again until we get the different result of it working), or to reboot it and try exactly the same thing again.

In this sense, insanity has become a requirement of functioning in the modern (computational) world!

Accommodating Intelligence

Perhaps the most radical idea we might proffer is that human intelligence be declared a disability in the world of machine intelligence. It is not so much that smart cities are making us stupid, but more likely that smart cities weed out human intelligence, or are unfriendly to it.

Many highly intelligent people have difficulty using an API (Application Prisoner’s Interface) or SDK (System Detention Kit), i.e. super smart people often work better “close to the metal” at the lower level of assembly language and machine code than they do using high-level programming. In some sense, our modern world shuts these people out.

To the extent that high degrees of human intelligence might be declared a disability, it might be possible to enforce accommodation. In this way, accessibility requirements could be set forth along the lines of free+open source computing as a social movement.

To accommodate persons of different abilities, it could be required that there be direct access (bypassing any proprietary SDK or API) to computing systems, such that we celebrate diversity. Persons with extreme intelligence might then no longer be marginalized outliers, but rather differently abled members of society who can be welcomed into the world of machine intelligence at whatever level they work best. Such persons, who now represent a minority, might no longer be excluded from participation in many computational intelligence activities.

Along with the prevalence of fake news and fake reality, there is also a kind of witchcraft science, akin to Plato’s cave, in which people have come to accept a world they can’t understand. We’ve become acclimatized to closed-source secrecy, and if this continues we may end up with entire cities that a person can’t understand, no matter how intelligent they might be. We must not build smart cities that exclude smart people.

Media must be directly accessible in the chosen format of the recipient, outside any browser, app, or viewer, for those who wish to construct their own way of seeing the computational world. The differently-abled, whether blind, partially sighted, or of an unusual intelligence (unusually high or unusually low) must be able to freely access content and participate in whatever way works best for them.

Smart cities must accommodate smart cars. And smart cars must accommodate smart people. Not everyone wants a car with an “Idiot Light” (“Check Engine Light”). Some users actually want to understand their engine or electric motor intimately, and should not be denied the capacity to understand the world in the way that works best for their differently abled mind. Some technology simply doesn’t belong in our smart world.